
[Python] Time-series Denoising with a CNN Stacked AutoEncoder

오디세이99

2023. 7. 18. 10:19


import numpy as np

import matplotlib.pyplot as plt

from tensorflow.keras.models import Model

from tensorflow.keras.layers import Input, Conv1D, MaxPooling1D, UpSampling1D

from sklearn.model_selection import train_test_split

from sklearn.preprocessing import MinMaxScaler

from tensorflow.keras.callbacks import Callback

# Generate clean sine wave

data_size = 2000 # Increased data size

t = np.linspace(0, 10, data_size)

clean_data = np.sin(t)

# Add uniform noise

noise = np.random.uniform(-0.1, 0.1, data_size)

noisy_data = clean_data + noise

# Normalize data

scaler = MinMaxScaler(feature_range=(0, 1)) # Normalization to [0, 1]

noisy_data = scaler.fit_transform(noisy_data.reshape(-1, 1))

# Reshape the data to a 2D array (necessary for the convolutional autoencoder)

noisy_data = noisy_data.reshape((-1, 1))

# Parameters

input_shape = (None, 1) # Any number of time steps with 1 feature

conv_filters = [32, 16, 8] # three layers with 32, 16, 8 filters

kernel_size = 3 # Size of the convolving kernel

pool_size = 2 # Size of the max pooling windows

# Input

input_layer = Input(shape=input_shape)

# Encoder

x = Conv1D(conv_filters[0], kernel_size, activation='relu', padding='same')(input_layer)

x = MaxPooling1D(pool_size)(x)

x = Conv1D(conv_filters[1], kernel_size, activation='relu', padding='same')(x)

x = MaxPooling1D(pool_size)(x)

x = Conv1D(conv_filters[2], kernel_size, activation='relu', padding='same')(x)

encoded = MaxPooling1D(pool_size)(x)

# Decoder

x = Conv1D(conv_filters[2], kernel_size, activation='relu', padding='same')(encoded)

x = UpSampling1D(pool_size)(x)

x = Conv1D(conv_filters[1], kernel_size, activation='relu', padding='same')(x)

x = UpSampling1D(pool_size)(x)

x = Conv1D(conv_filters[0], kernel_size, activation='relu', padding='same')(x)

x = UpSampling1D(pool_size)(x)

decoded = Conv1D(1, kernel_size, activation='sigmoid', padding='same')(x)

# Autoencoder

autoencoder = Model(input_layer, decoded)

autoencoder.compile(optimizer='adam', loss='mse')

# Print a summary of the model

autoencoder.summary()

class CustomCallback(Callback):  # Prints the losses every 100 epochs

    def on_epoch_end(self, epoch, logs=None):

        if epoch % 100 == 0:  # True at epochs 0, 100, 200, ...

            print(f"After epoch {epoch}, loss is {logs['loss']}, val_loss is {logs['val_loss']}")

# Split data into train and test sets

train_data, test_data = train_test_split(noisy_data, test_size=0.2, shuffle=False)

# Reshape the data into the format expected by the autoencoder

train_data = train_data.reshape((1, *train_data.shape))

test_data = test_data.reshape((1, *test_data.shape))

# Train the model

history = autoencoder.fit(train_data, train_data, epochs=5000, validation_data=(test_data, test_data), verbose=0, callbacks=[CustomCallback()])

# Predict on the test data

denoised_data = autoencoder.predict(test_data)

# Reshape denoised data to 2D

denoised_data = np.squeeze(denoised_data)

denoised_data = denoised_data.reshape(-1, 1) # Convert to 2D array

# Invert normalization

noisy_data = scaler.inverse_transform(noisy_data)

denoised_data = scaler.inverse_transform(denoised_data)

# Calculate the start and end index of the test data

start_idx = int(0.8 * len(t)) # 80% of the data is used for training

end_idx = start_idx + len(test_data[0]) # The remainder is used for testing

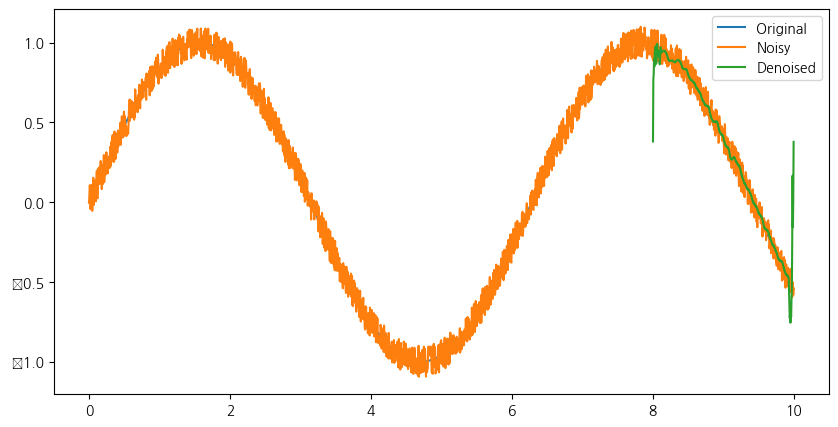

# Plot the original, noisy, and denoised data

plt.figure(figsize=(10, 5))

plt.plot(t, clean_data, label='Original')

plt.plot(t, noisy_data.flatten(), label='Noisy')

plt.plot(t[start_idx:end_idx], denoised_data.flatten(), label='Denoised')

plt.legend()

plt.show()

Model: "model_16"

_________________________________________________________________
 Layer (type)                     Output Shape         Param #
=================================================================
 input_17 (InputLayer)            [(None, None, 1)]    0
 conv1d_112 (Conv1D)              (None, None, 32)     128
 max_pooling1d_48 (MaxPooling1D)  (None, None, 32)     0
 conv1d_113 (Conv1D)              (None, None, 16)     1552
 max_pooling1d_49 (MaxPooling1D)  (None, None, 16)     0
 conv1d_114 (Conv1D)              (None, None, 8)      392
 max_pooling1d_50 (MaxPooling1D)  (None, None, 8)      0
 conv1d_115 (Conv1D)              (None, None, 8)      200
 up_sampling1d_48 (UpSampling1D)  (None, None, 8)      0
 conv1d_116 (Conv1D)              (None, None, 16)     400
 up_sampling1d_49 (UpSampling1D)  (None, None, 16)     0
 conv1d_117 (Conv1D)              (None, None, 32)     1568
 up_sampling1d_50 (UpSampling1D)  (None, None, 32)     0
 conv1d_118 (Conv1D)              (None, None, 1)      97
=================================================================
Total params: 4,337
Trainable params: 4,337
Non-trainable params: 0
_________________________________________________________________
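The parameter counts in the summary can be cross-checked by hand. A Conv1D layer with a bias term holds (kernel_size × input channels + 1) × filters trainable values. The sketch below is plain Python and is not part of the original script; the helper name `conv1d_params` is made up for illustration. It reproduces the seven Conv1D rows and the total of 4,337.

```python
def conv1d_params(in_channels, filters, kernel_size=3):
    """Weights plus biases of one Conv1D layer (bias enabled, the Keras default)."""
    return (kernel_size * in_channels + 1) * filters


# Channel counts through the network: 1 input feature, encoder 32-16-8,
# decoder 8-16-32, then a single output channel.
channels = [1, 32, 16, 8, 8, 16, 32, 1]

per_layer = [
    conv1d_params(c_in, c_out)
    for c_in, c_out in zip(channels, channels[1:])
]
total = sum(per_layer)

print(per_layer)  # [128, 1552, 392, 200, 400, 1568, 97]
print(total)      # 4337
```

The MaxPooling1D and UpSampling1D rows show 0 because those layers have no trainable weights.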

After epoch 0, loss is 0.10450702905654907, val_loss is 0.06911314278841019

After epoch 100, loss is 0.003051506821066141, val_loss is 0.0035117107909172773

After epoch 200, loss is 0.0007455113809555769, val_loss is 0.0023853301536291838

After epoch 300, loss is 0.0007021011551842093, val_loss is 0.002051988150924444

After epoch 400, loss is 0.0006892073433846235, val_loss is 0.001874042907729745

After epoch 500, loss is 0.0006814108928665519, val_loss is 0.0017645710613578558

After epoch 600, loss is 0.0006783285061828792, val_loss is 0.0017318697646260262

After epoch 700, loss is 0.0006759861134923995, val_loss is 0.0017360710771754384

After epoch 800, loss is 0.000673560076393187, val_loss is 0.0017452369211241603

After epoch 900, loss is 0.0006985874497331679, val_loss is 0.0017664558254182339

After epoch 1000, loss is 0.0006707648863084614, val_loss is 0.001741617452353239

After epoch 1100, loss is 0.0006916309357620776, val_loss is 0.0017732185078784823

After epoch 1200, loss is 0.0006682073581032455, val_loss is 0.0017409463180229068

After epoch 1300, loss is 0.000674318231176585, val_loss is 0.0017417854396626353

After epoch 1400, loss is 0.0006667553097940981, val_loss is 0.0017520369729027152

After epoch 1500, loss is 0.0006646261899732053, val_loss is 0.0017481767572462559

After epoch 1600, loss is 0.0006694296607747674, val_loss is 0.0017526986775919795

After epoch 1700, loss is 0.0006620572530664504, val_loss is 0.0017598049016669393

After epoch 1800, loss is 0.0006611980497837067, val_loss is 0.0017639095894992352

After epoch 1900, loss is 0.0006602614303119481, val_loss is 0.0017728423699736595

After epoch 2000, loss is 0.0006668126443400979, val_loss is 0.0017951461486518383

After epoch 2100, loss is 0.0006750418106094003, val_loss is 0.0018031119834631681

After epoch 2200, loss is 0.0006573552382178605, val_loss is 0.0017947613960132003

After epoch 2300, loss is 0.0006586895906366408, val_loss is 0.0018061655573546886

After epoch 2400, loss is 0.0006646490073762834, val_loss is 0.0018159964820370078

After epoch 2500, loss is 0.000656965421512723, val_loss is 0.0018387611489742994

After epoch 2600, loss is 0.0006550662219524384, val_loss is 0.00186380953527987

After epoch 2700, loss is 0.0006501793977804482, val_loss is 0.001874843379482627

After epoch 2800, loss is 0.0006549088866449893, val_loss is 0.0018747906433418393

After epoch 2900, loss is 0.0007012670976109803, val_loss is 0.0019860200118273497

After epoch 3000, loss is 0.0006486615748144686, val_loss is 0.0018858775729313493

After epoch 3100, loss is 0.0006473447429016232, val_loss is 0.001890695421025157

After epoch 3200, loss is 0.0006451343651860952, val_loss is 0.0019256609957665205

After epoch 3300, loss is 0.0006439416320063174, val_loss is 0.0019586749840527773

After epoch 3400, loss is 0.0006427128682844341, val_loss is 0.0019668922759592533

After epoch 3500, loss is 0.0006458878633566201, val_loss is 0.0020130136981606483

After epoch 3600, loss is 0.000639669771771878, val_loss is 0.002026270842179656

After epoch 3700, loss is 0.0006386086461134255, val_loss is 0.0020245241466909647

After epoch 3800, loss is 0.0006373562500812113, val_loss is 0.0020193075761198997

After epoch 3900, loss is 0.0006375666125677526, val_loss is 0.002027568407356739

After epoch 4000, loss is 0.0006589865079149604, val_loss is 0.002076950389891863

After epoch 4100, loss is 0.000634072523098439, val_loss is 0.0020566852763295174

After epoch 4200, loss is 0.000635586678981781, val_loss is 0.0020770367700606585

After epoch 4300, loss is 0.0006328367162495852, val_loss is 0.0020998246036469936

After epoch 4400, loss is 0.0006306078284978867, val_loss is 0.0021127136424183846

After epoch 4500, loss is 0.0006279853987507522, val_loss is 0.002122009638696909

After epoch 4600, loss is 0.0006361935520544648, val_loss is 0.002124659949913621

After epoch 4700, loss is 0.0006304665002971888, val_loss is 0.002083962317556143

After epoch 4800, loss is 0.0006321638356894255, val_loss is 0.0021081753075122833

After epoch 4900, loss is 0.0006237715133465827, val_loss is 0.002068204339593649
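Reading the log, the training loss keeps creeping down while val_loss bottoms out early and then drifts upward, which suggests the model begins to fit the noise in the training segment. The sketch below picks the best logged checkpoint from a handful of the values printed above (copied from the log and rounded to 7 decimals). Only every 100th epoch was printed, so the true minimum may lie between checkpoints. In practice, the Keras `EarlyStopping` callback with `monitor='val_loss'` and `restore_best_weights=True` is one way to stop near that point; that is a suggestion, not something the original script does.

```python
# val_loss values copied from the log above (a subset, rounded to 7 decimals).
logged_val_loss = {
    0: 0.0691131,
    100: 0.0035117,
    300: 0.0020520,
    600: 0.0017319,
    1000: 0.0017416,
    2000: 0.0017951,
    3000: 0.0018859,
    4000: 0.0020770,
    4900: 0.0020682,
}

# Epoch with the lowest logged validation loss.
best_epoch = min(logged_val_loss, key=logged_val_loss.get)

# Relative increase of val_loss from the best checkpoint to the last one.
final_epoch = max(logged_val_loss)
increase = logged_val_loss[final_epoch] / logged_val_loss[best_epoch] - 1

print(best_epoch)         # 600
print(f"{increase:.0%}")  # 19%
```

By this reading, most of the 5000 epochs after roughly epoch 600 do not improve the result on the held-out segment.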

1/1 [==============================] - 0s 110ms/step
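The single `1/1` step is the `predict` call on the 400-sample test segment. Its output lines up with the input only because 400 (like the 1600-sample training segment) is a multiple of 8: three MaxPooling1D layers with pool size 2 each floor-divide the length by 2, and three UpSampling1D layers multiply it back. The following is a minimal sketch of that arithmetic in plain Python, assuming the default 'valid' pooling used in the script; the helper names `output_length` and `padding_needed` are hypothetical.

```python
def output_length(n_steps, pool_size=2, n_levels=3):
    """Length of the autoencoder output for an input of n_steps time steps."""
    length = n_steps
    for _ in range(n_levels):
        length //= pool_size  # MaxPooling1D ('valid' padding) drops a leftover step
    for _ in range(n_levels):
        length *= pool_size   # UpSampling1D repeats each step pool_size times
    return length


def padding_needed(n_steps, pool_size=2, n_levels=3):
    """Extra steps required to reach the next multiple of pool_size ** n_levels."""
    return -n_steps % (pool_size ** n_levels)


print(output_length(1600), output_length(400))    # 1600 400
print(output_length(401), padding_needed(401))    # 400 7
```

With a different `data_size` or `test_size`, a segment whose length is not a multiple of 8 would come back shorter than its target, so padding or trimming it first is one way to keep the shapes aligned.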
